Playform: Integrate AI Into Your Creative Process

“Playform makes it possible for me to explore using AI without having to get a second degree in computer science.”

— An Artist Using Playform

What Is AI Art? Can AI Make Art?

Art is made by artists, not AI. We developed Playform as an AI art studio that allows artists to experiment with and explore generative AI as part of their creative process. Our goal is to make AI accessible to artists, recognizing the challenges that artists and creatives face when approaching this technology.

With artificial intelligence (AI) being incorporated into more and more aspects of our daily lives, from our phones to our cars, it’s only natural that artists would also start to experiment with it. This is not new, however. Since the early days of AI, over the last 50 years, a number of artists have written computer programs that generate art, in some cases incorporating elements of intelligence. The most prominent early example of such work is Harold Cohen’s art-making program AARON, which produced drawings that followed a set of rules Cohen had hard-coded. Among others, American artist Lillian Schwartz, a pioneer in using computer graphics in art, also experimented with AI.

Over the past couple of decades, however, AI has increasingly been built on machine learning, and in the last few years this has given rise to a new wave of algorithmic art that uses AI in new ways. In traditional algorithmic art, the artist had to write detailed code that explicitly specified the rules for the desired aesthetics. In this new wave, artists instead set up algorithms that “learn” the aesthetics by looking at many images using machine learning. Only then does the algorithm generate new images that follow the aesthetics it has learned.
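As a toy illustration of this contrast (not how Playform or any GAN works internally), the Python sketch below compares a hard-coded rule with a “learned” generator. The stroke-length framing, the example numbers, and all function names are hypothetical. The “learning” step simply estimates a mean and spread from example data and then samples new values from a Gaussian with those parameters, a far simpler stand-in for real machine learning.

```python
import random
import statistics


# Traditional algorithmic art: the artist hard-codes the aesthetic as a rule.
def rule_based_stroke_lengths(n, rng):
    # Hypothetical rule: every stroke is between 10 and 20 units long.
    return [rng.uniform(10, 20) for _ in range(n)]


# Learning-based approach (toy stand-in): estimate the "style" from examples...
def learn_style(examples):
    # "Training" here just means estimating the mean and spread of the examples.
    return statistics.mean(examples), statistics.stdev(examples)


# ...then generate new samples that follow the learned style.
def generate_from_style(style, n, rng):
    mu, sigma = style
    return [rng.gauss(mu, sigma) for _ in range(n)]


rng = random.Random(0)
rule_strokes = rule_based_stroke_lengths(5, rng)

# Hypothetical measurements taken from an artist's own works.
artist_examples = [42.0, 45.5, 39.8, 44.1, 41.7, 43.9]
style = learn_style(artist_examples)
new_strokes = generate_from_style(style, 5, rng)
```

A GAN replaces the hand-picked Gaussian with a neural network that can capture far richer structure, but the division of labor is the same: the artist supplies examples rather than rules.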

What Is a GAN and How Is It Used to Make Art?

The most widely used tool for this is the Generative Adversarial Network (GAN), introduced by Ian Goodfellow and colleagues in 2014 (Goodfellow 2014), which has been successful in many applications across the AI community. The development of GANs has likely sparked this new wave of AI art.
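For readers curious about the “adversarial” part, the original formulation (Goodfellow 2014) pits two networks against each other: a generator $G$ that maps random noise $z$ to an image, and a discriminator $D$ that outputs the probability that an image is real rather than generated. The two are trained on the minimax objective

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize $V$ by scoring real images $x$ close to 1 and generated images $G(z)$ close to 0, while the generator tries to minimize $V$ by producing images the discriminator mistakes for real ones. Later GAN variants typically modify the architectures, losses, or training procedure built around this basic game.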

However, using GANs and similar generative methods to make art is challenging and beyond the reach of most artists, apart from creative technologists. I will try to summarize these challenges here.

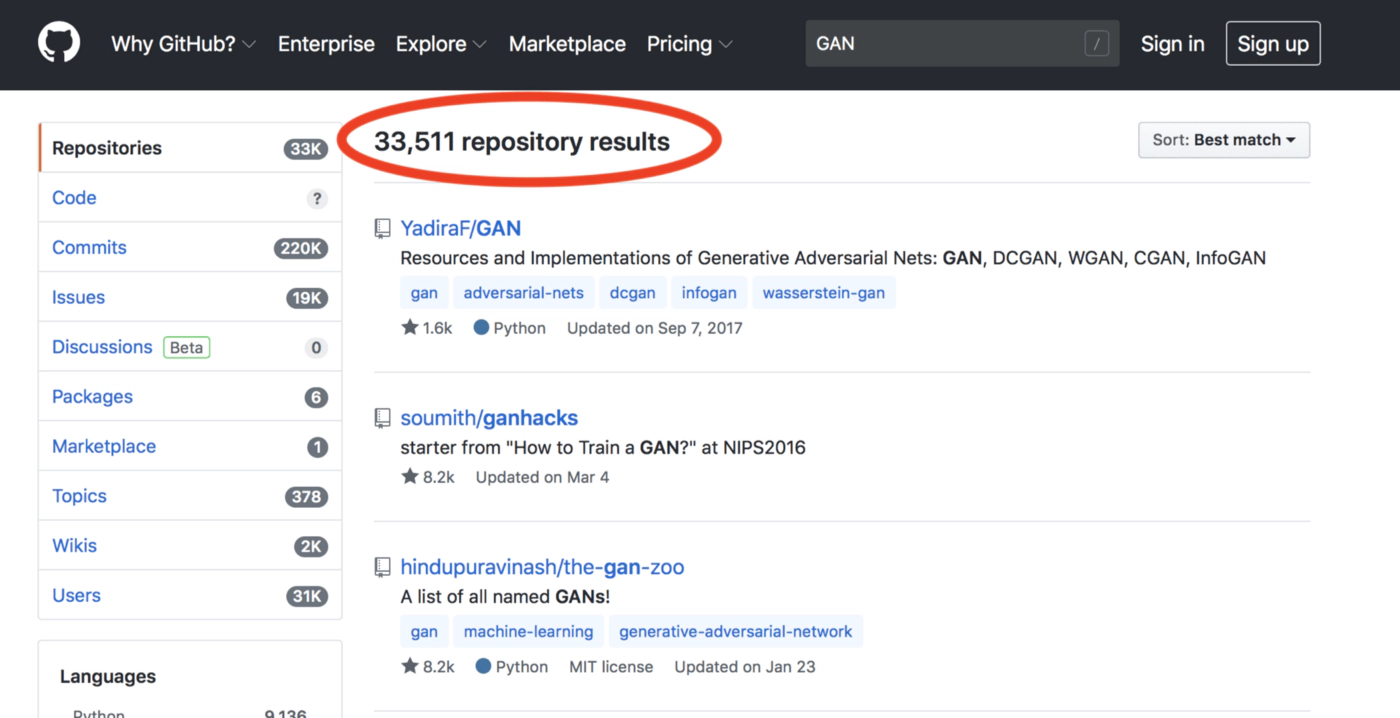

GAN-Ocean: In the few years since GANs were introduced, there has been explosive interest in the AI community in developing new and improved types of GANs that address their limitations and extend their capabilities as generative engines for images, language, and music. This makes it nearly impossible for an artist approaching this technology to even know where to start. For example, if you search for the term “GAN” on GitHub, the code repository where developers publish their open-source code, you will find tens of thousands of GAN variants. As an artist, you are left clueless in front of this ocean of GAN-like algorithms, wondering where to start and which algorithm would fit your creative process.

Screenshot of the code repository GitHub showing more than 33K available open-source repositories for GAN variants (screenshot taken in April 2020).

Computational challenge: Even with open-source code available, artists face several challenges. If you are not a developer who is familiar with today’s programming languages and up to date with the latest AI libraries, you are unlikely to be able to benefit from existing open-source code. Moreover, running such sophisticated AI programs requires GPUs (Graphics Processing Units), specialized hardware boards that speed up processing by tens to hundreds of times, making it possible to train AI models in hours or days instead of several weeks. A GPU board able to run state-of-the-art AI algorithms costs more than $2,000. Some platforms let users run open-source code on cloud-based GPUs, but the hourly charges can quickly add up to a substantial bill if you are not sure what you are doing.
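To make the cost trade-off concrete, here is a small back-of-the-envelope calculation in Python. The $3-per-hour cloud rate, the 24-hour run length, and the number of runs are hypothetical placeholders, not prices from any provider; only the $2,000 GPU price comes from the paragraph above.

```python
def cloud_gpu_cost(hourly_rate_usd, hours_per_run, runs):
    """Total cloud bill for a number of training runs (all inputs hypothetical)."""
    return hourly_rate_usd * hours_per_run * runs


def break_even_hours(gpu_price_usd, hourly_rate_usd):
    """Hours of cloud GPU time that cost as much as buying a GPU board outright."""
    return gpu_price_usd / hourly_rate_usd


# Hypothetical scenario: $3/hour, 24-hour training runs, 10 trial-and-error runs.
bill = cloud_gpu_cost(3.0, 24, 10)

# Using the $2,000 board price mentioned in the text.
hours = break_even_hours(2000.0, 3.0)
```

With these assumed numbers, ten day-long trial-and-error runs already cost $720, and roughly 667 hours of cloud time would cost as much as buying the board outright.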

Massive data requirements: Another challenge artists face when they start using GAN-like algorithms is that these algorithms require huge numbers of images (tens of thousands) for “training” to get reasonable results. Most of them are trained and tested on existing image datasets, typically curated for AI research. Most artists, however, want to use their own image collections in their projects. At Playform we found that in most cases artists want to train AI algorithms on collections of fewer than 100 images, which is not enough to train off-the-shelf AI algorithms to generate the desired results.

The Terminology Barrier: As a non-AI expert, you will face a vast number of technical terms that you need to navigate to gain the minimum understanding required to be in control of the process. You will have to understand concepts like training, loss, over-fitting, mode collapse, layers, learning rate, kernels, channels, iterations, batch size, and lots more AI jargon. Most artists would give up here, or play blindly with the knobs hoping for interesting results, only to realize they are more likely to win the lottery. Given the cost of GPU time and the length of the process, that means hours and hours of wasted time and resources without anything interesting to show for it.
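To give a feel for a few of these terms, the Python sketch below is a toy training loop, not a GAN: it “learns” a single number that best fits some example data. The data, the function name train, and the default values are hypothetical. Each iteration draws a random batch of batch_size examples, measures the loss (here, mean squared error), and nudges the parameter against the gradient by a step scaled by the learning rate.

```python
import random


def train(data, learning_rate=0.1, batch_size=4, iterations=200, seed=0):
    """Toy training loop: learn one number theta that minimizes the mean
    squared error (the loss) to the training data via mini-batch gradient descent."""
    rng = random.Random(seed)
    theta = 0.0  # the model's only parameter, starting from an arbitrary guess
    losses = []
    for _ in range(iterations):  # one iteration = one update step
        batch = rng.sample(data, batch_size)  # a random mini-batch of examples
        # Loss: mean squared error between the parameter and the batch.
        loss = sum((theta - x) ** 2 for x in batch) / batch_size
        # Gradient of that loss with respect to theta.
        grad = sum(2 * (theta - x) for x in batch) / batch_size
        theta -= learning_rate * grad  # the learning rate scales each step
        losses.append(loss)
    return theta, losses


# Hypothetical training data; the best-fitting theta is its mean, 5.5.
data = [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
theta, losses = train(data)
```

Too large a learning rate makes the parameter overshoot and diverge, while too small a one makes training crawl. A real GAN has millions of parameters and two competing losses, which is why tuning these knobs by trial and error gets expensive.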

Introducing Playform:

We built Playform to make AI accessible for artists. We want artists to be able to explore and experiment with AI as part of their own creative process, without worrying about AI terminology, or the need to navigate unguided through the vast ocean of AI and GAN-like algorithms.

Most generative AI algorithms are developed by AI researchers in academia and big corporate research labs to push the boundaries of the technology. Artists and creatives are not typically the target audience when these algorithms are developed. Using these algorithms in an artist’s work is an act of creativity in itself: the artist has to be imaginative in bending, adapting, and using such non-specialized tools for their own purposes. At Playform, in contrast, we focused on building AI that fits the creative process of different artists, from looking for inspiration, to preparing assets, all the way to producing final works.

On the research and development side, we had to address the problem that GANs require large numbers of images and long hours of training. We worked on developing optimized versions of GANs that can be trained with tens of images instead of thousands, and can produce reasonable results in one or two hours.

Workflow in Playform. The user chooses a creative process (top left), then uploads inspiration images and possible influences (bottom left). As training progresses, the user sees and navigates through the results.

On the design side, we focused on making the user experience intuitive and free of AI jargon. All the AI is hidden under the hood. Users choose a creative process, upload their own images, and press a button to start training. Within minutes, results start to pop up and evolve as training continues. Within an hour or a bit more, the process is done and thousands of images have been generated. Users can navigate through all iterations to find their favorite results, and can continue training as needed to achieve better results.

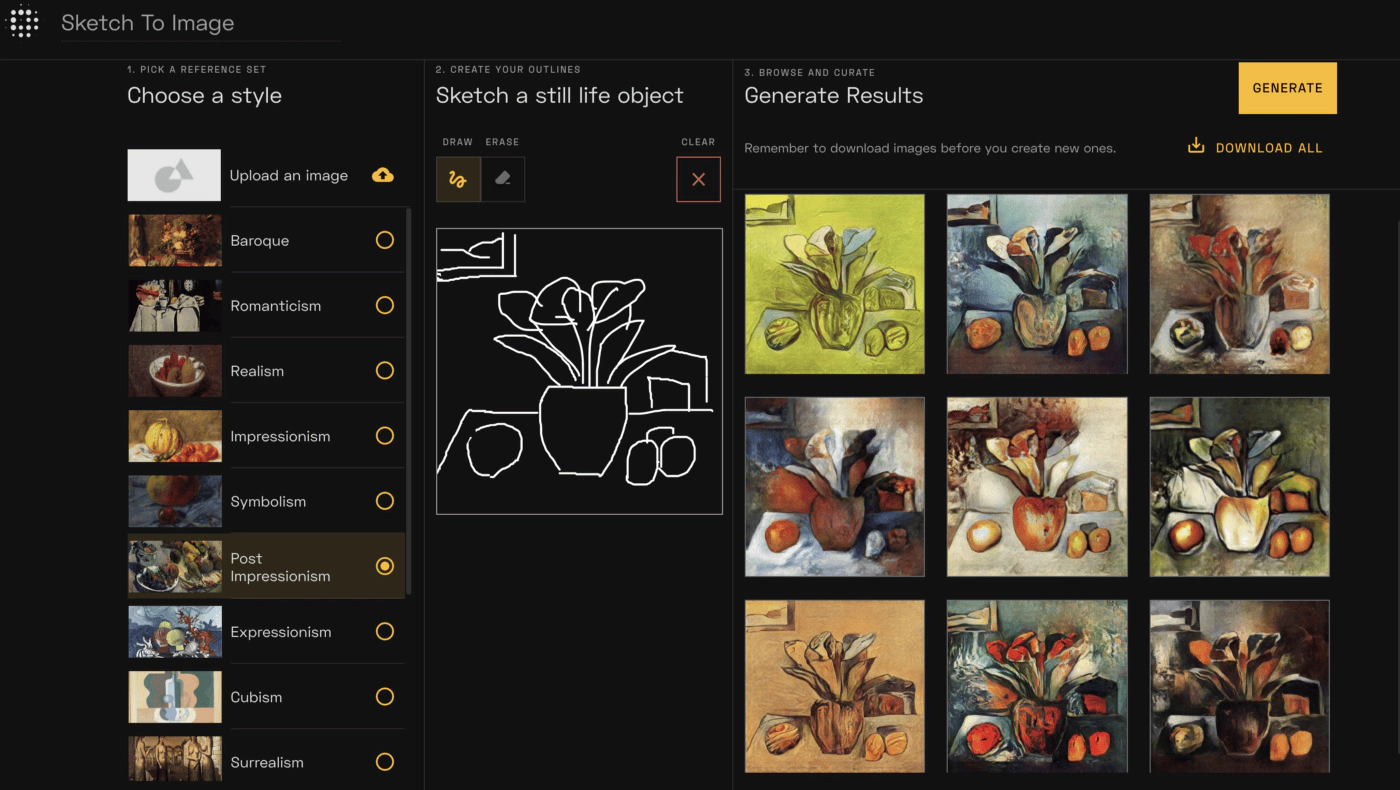

From a sketch to a fully rendered image in seconds: AI gives you many ways to complete your sketch in your preferred style.

In 2020, we also added a sketching tool that allows users to draw a sketch and use AI to create a fully rendered image in any style, with art history as their brush. You can read more about this here.

Playform has since expanded to offer a variety of AI art generators that suit different artists’ needs and integrate the latest technologies, including Style Transfer generators, a Text to Image generator, AI Upscaling, Video Generators, and other tools.

What Have Artists Done Using AI?

Pioneering AI artists have been exploring innovative ways to integrate AI into their work through Playform since as early as 2019. Some artists used Playform as a means of finding inspiration in AI’s uncanny aesthetics. Others fed in images of their own artworks, training models that learned their own style, and then used these models to generate new artworks based on new inspirations. Virtual reality artists used AI to generate digital assets for virtual reality experiences. Several artists used Playform to generate imagery for videos. Playform has also been used to generate works that were upscaled and printed as final art products.

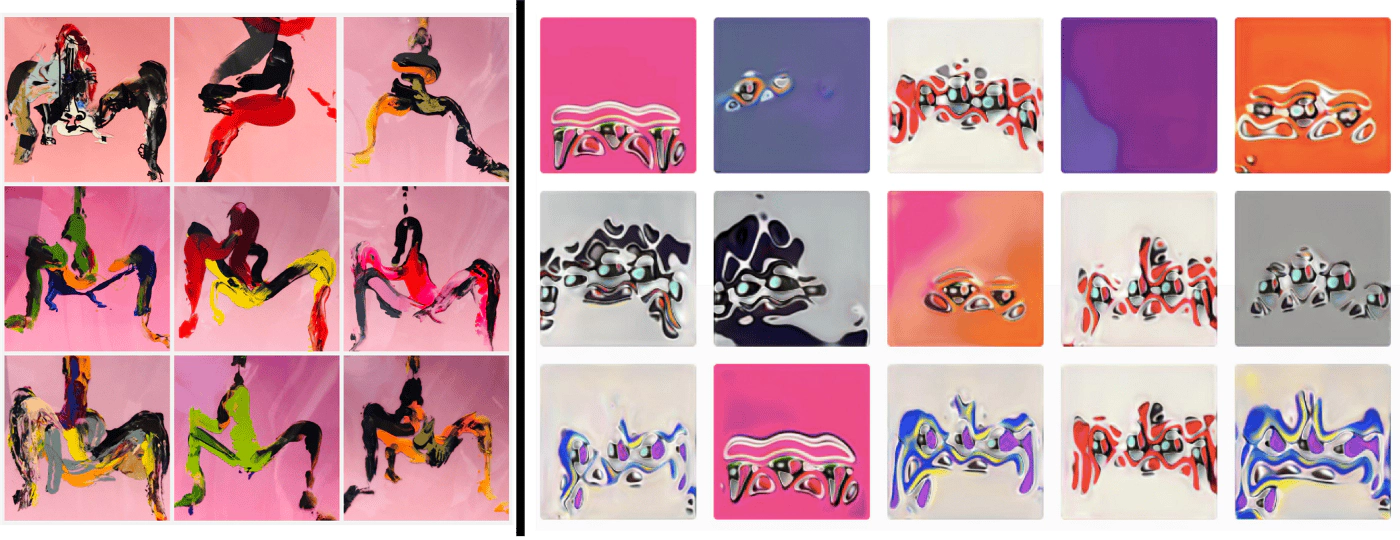

Qinza Najm, a Playform artist in residence, worked with Playform to create a process inspired by her own artworks that explored abstract images based on the human body. The series that emerged from the collaboration was chosen for an exhibition about art and science at the National Museum of China in Beijing in November 2019, which drew 1 million visitors during its one-month run.

Example of work by artist Qinza Najm in Playform. The artist used images of her own artworks “reclaiming space” (left) as the inspiration source. Examples of the generated images are shown in the right panel. Selections from this project were exhibited at the National Museum of China, Beijing, in November 2019 as part of an exhibition on art and science.

Along with groundbreaking works based on artists’ existing approaches, Playform has enabled conceptual explorations of what it means to be a mediated human and how we collide and merge with our digitally generated selves. NYU professor and artist Carla Gannis used Playform to create a series of works based on childhood memories for an avatar named C.A.R.L.A. G.A.N. She then monitored people’s responses to these works versus her own “human” works. In another experiment, Gannis used Playform to create visuals that she incorporated into a larger VR-based project, which was exhibited at Telematic in San Francisco in March 2020.

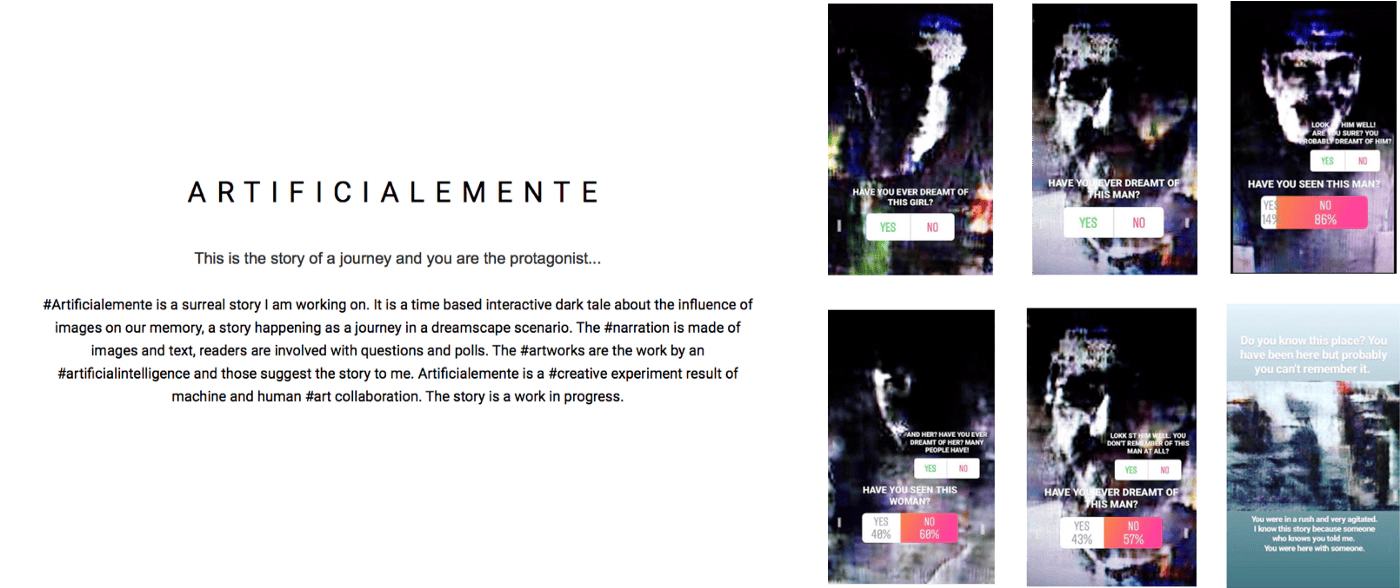

Italian artist Domenico Barra developed a project called Artificialemente, which explored storytelling through Instagram stories using works made with Playform. In another project, So[n]no, Barra created a self-portrait about identity and his city of origin, also using works created with Playform. In Erased_Human, Barra explored computer vision, machinic art, and the utopia of erasing humans from the creative process of machines.

Sample of “Artificialemente”, a conceptual work by artist Domenico Barra in which Playform was not only used to generate the images in the work but was also part of its inspiration.

Playform’s images have also been used as a foundation for works in other media. Artist Anne Spalter generated images using Playform, then executed them in pastels on canvas, drawing on AI’s peculiar ability to surface and blend unexpected elements. Spalter recently exhibited her Playform-based art at the Spring Break Art Fairs in LA and New York City.

Examples of how artist Anne Spalter integrated AI into different media in her work. Left: an inflatable inspired by images the artist created in Playform (exhibited at the Spring Break Art Fair, LA, 2020). Right: pastel drawings the artist created, inspired by results from Playform (exhibited at the Spring Break Art Fair, NYC, 2020).

Every day, new artists come to Playform, create incredible new works, and invent new ways to integrate AI into their work. Again and again, I see the same trajectory: artists go from being skeptical about what AI can add to their work, to being intrigued by what AI creates, to falling in love with AI as a creative partner.

Check out the many different ways you can create art using AI in Playform through these tutorials.